Unlocking the Power of Container Orchestration for Machine Learning Workflows

Machine learning (ML) teams increasingly need infrastructure that is both efficient and scalable. As organizations work with larger datasets and more complex models, many have turned to container orchestration platforms to streamline their workflows. This article explains why container orchestration matters for ML and how it changes the way these workflows are built and managed.

The Foundation of Container Orchestration

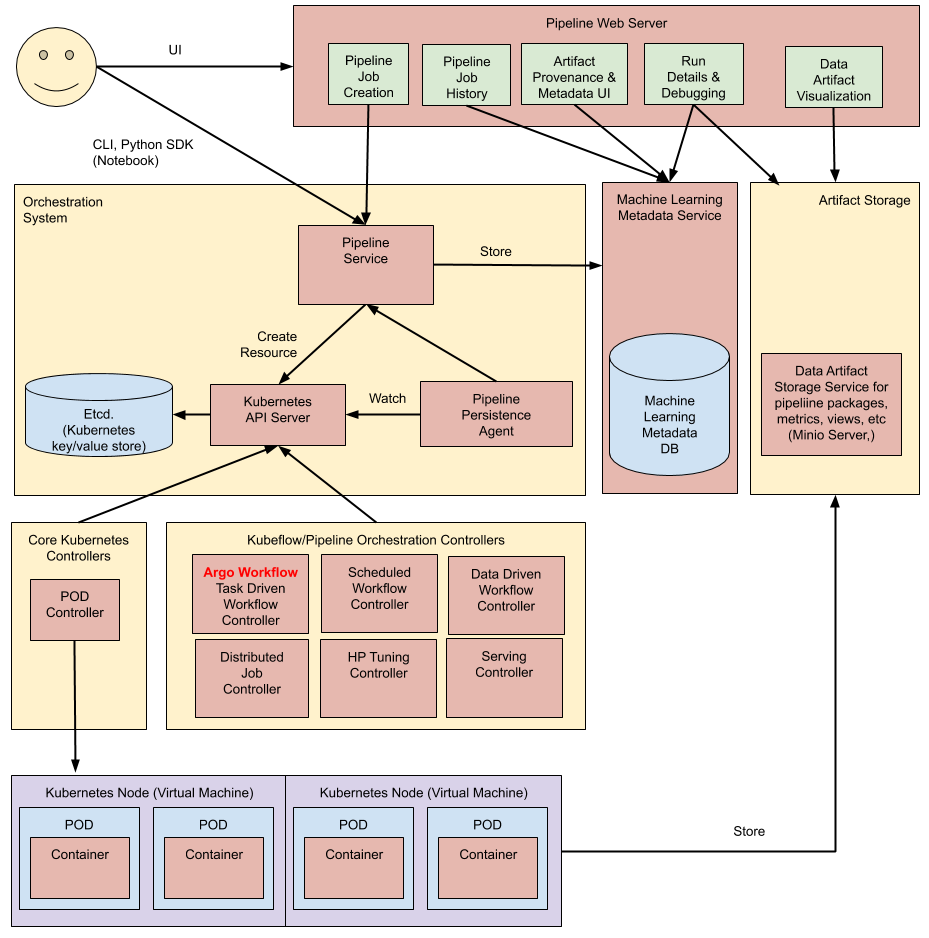

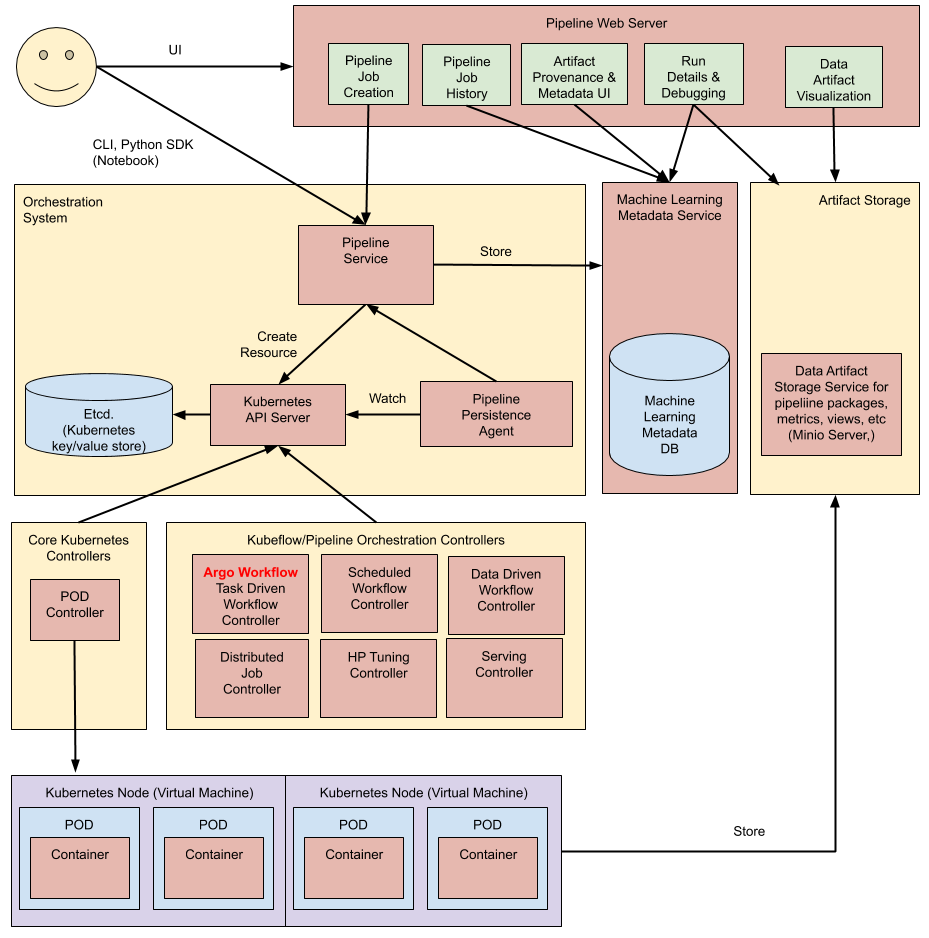

At its core, container orchestration automates the deployment, scaling, and management of containerized applications. A container packages an application together with its dependencies and runtime, which is why containers have become so popular: the same container behaves consistently across development, testing, and production environments. Orchestration tools such as Kubernetes schedule these containers onto a cluster of machines, restart them when they fail, and scale them up or down as demand changes.
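
To make this concrete, the unit you hand to Kubernetes is a declarative manifest describing the desired state, for example "run three copies of this container image". The Python sketch below builds a minimal `apps/v1` Deployment manifest as a plain dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The name `model-server`, the image `registry.example.com/ml/model-server:1.0`, and port 8080 are hypothetical placeholders.

```python
import json


def make_deployment(name: str, image: str, replicas: int = 2) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Kubernetes keeps this many identical pods running.
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Hypothetical port the model server listens on.
                            "ports": [{"containerPort": 8080}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image name; write the output to a file and run `kubectl apply -f` on it.
    manifest = make_deployment(
        "model-server", "registry.example.com/ml/model-server:1.0", replicas=3
    )
    print(json.dumps(manifest, indent=2))
```

Kubernetes then continuously reconciles the cluster toward this description: if a pod crashes or a node disappears, a replacement pod is started so that three replicas keep running.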

Addressing Complexity in Machine Learning Workflows

Machine learning workflows span many stages, from data preprocessing and model training to deployment and monitoring. Container orchestration manages this complexity by running every stage on a single platform that schedules and scales each task. Packaging each stage of the ML pipeline as its own container keeps its environment consistent and reproducible, which makes it easier for teams to collaborate and experiment.
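
As a sketch of what "one container per stage" can look like, batch stages such as preprocessing, training, and evaluation map naturally onto Kubernetes `batch/v1` Jobs, which run a container to completion. The Python below builds one Job manifest per stage; the stage names, image tags, run ID, and retry limit are hypothetical. It only generates manifests: running the stages in order (start `train` after `preprocess` succeeds) is left to a workflow engine such as Kubeflow Pipelines.

```python
import json

# Hypothetical stages, each pinned to its own container image.
STAGES = [
    ("preprocess", "registry.example.com/ml/preprocess:1.0"),
    ("train", "registry.example.com/ml/train:1.0"),
    ("evaluate", "registry.example.com/ml/evaluate:1.0"),
]


def make_job(stage: str, image: str, run_id: str) -> dict:
    """Build a minimal batch/v1 Job manifest for one pipeline stage."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {
            "name": f"{run_id}-{stage}",
            "labels": {"run": run_id, "stage": stage},
        },
        "spec": {
            # Retry a failed stage at most twice before marking the Job failed.
            "backoffLimit": 2,
            "template": {
                "spec": {
                    # Job pods must use restartPolicy Never or OnFailure.
                    "restartPolicy": "Never",
                    "containers": [{"name": stage, "image": image}],
                }
            },
        },
    }


def make_pipeline(run_id: str) -> list:
    """Return one Job manifest per stage, in execution order."""
    return [make_job(stage, image, run_id) for stage, image in STAGES]


if __name__ == "__main__":
    for job in make_pipeline("run-001"):
        print(json.dumps(job))
```

Because each stage's environment is fixed by its image, rerunning the same run with the same images reproduces the same software stack for every stage.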

Streamlining Collaboration with Kubernetes

Collaboration is a cornerstone of successful ML projects, and Kubernetes, one of the leading container orchestration platforms, supports it across data scientists, engineers, and DevOps teams. Because every workload runs in a container, all stakeholders build, test, and deploy against the same standardized environment instead of worrying about compatibility between their individual setups. This shared foundation shortens the path from developing an ML model to deploying it.

Scaling Horizontally for Enhanced Performance

Scalability is a critical consideration in ML, especially with large datasets and resource-intensive computations. Container orchestration platforms support horizontal scaling: instead of giving a single container more CPU or memory, they run additional replicas of it and distribute the workload across them. In Kubernetes this can be automated with the Horizontal Pod Autoscaler, which adjusts the number of replicas based on observed metrics such as CPU utilization, so ML applications can absorb varying load without manual intervention.
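
As an illustration of how automatic horizontal scaling decides how many replicas to run, the Kubernetes documentation gives the Horizontal Pod Autoscaler's core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change made when that ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below reproduces only that rule plus clamping to a minimum and maximum replica count. The function name and the default bounds are illustrative, and the real controller additionally applies stabilization windows and special handling for not-ready pods and missing metrics.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA-style replica calculation (illustrative only)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count unchanged.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go below the minimum or above the maximum replica count.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(3, 90, 60))   # 5: 3 * 1.5 = 4.5, rounded up
    print(desired_replicas(4, 20, 60))   # 2: load dropped, scale in
    print(desired_replicas(3, 63, 60))   # 3: ratio 1.05 is within tolerance
    print(desired_replicas(8, 150, 60))  # 10: 20 is clamped to max_replicas
```

Rounding up rather than to the nearest integer errs on the side of extra capacity, and the tolerance band keeps small metric fluctuations from causing constant scaling.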

Kubeflow: A Tailored Solution for ML Workloads

Kubeflow is a platform built on top of Kubernetes specifically for ML workloads. It extends Kubernetes with ML-oriented components, such as Katib for hyperparameter tuning and KFServing (since renamed KServe) for model serving, which together cover the ML workflow from experimentation to deployment.
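
Katib automates hyperparameter tuning by running many training trials, each in its own container, and keeping the configuration with the best objective metric; random search is one of the search algorithms it supports. The Python sketch below shows the idea of random search locally in a few lines. It is not Katib's API: the search space, the `objective` function, and the trial counts are hypothetical stand-ins for a real training job.

```python
import math
import random

# Hypothetical search space: learning rate (log-uniform) and batch size (categorical).
LR_RANGE = (1e-4, 1e-1)
BATCH_SIZES = [32, 64, 128]


def sample(rng: random.Random) -> dict:
    """Draw one random configuration from the search space."""
    lo, hi = LR_RANGE
    lr = 10 ** rng.uniform(math.log10(lo), math.log10(hi))
    return {"lr": lr, "batch_size": rng.choice(BATCH_SIZES)}


def objective(params: dict) -> float:
    """Hypothetical stand-in for a training trial; returns a loss to minimize."""
    return (math.log10(params["lr"]) + 2.5) ** 2 + abs(params["batch_size"] - 64) / 640


def random_search(n_trials: int, seed: int = 0) -> tuple:
    """Evaluate n_trials random configurations and return the best one and its loss."""
    rng = random.Random(seed)
    best_params, best_loss = None, math.inf
    for _ in range(n_trials):
        params = sample(rng)
        loss = objective(params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


if __name__ == "__main__":
    params, loss = random_search(50)
    print(params, loss)
```

On Kubernetes, each call to `objective` would instead be a separate containerized training run, which is what lets a tool like Katib evaluate many trials in parallel across the cluster.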

Integrating Kubeflow into an ML pipeline can make it more efficient and flexible. The open-source platform simplifies deploying trained models and makes it easier to run experiments with different configurations. For in-depth resources and tutorials on Kubeflow, visit itcertsbox.com.

Ensuring Reproducibility and Traceability

Reproducibility is fundamental to scientific research and equally important in machine learning. Containers give every experiment a standardized environment, and Kubernetes, together with Kubeflow on top of it, runs those containers the same way each time. As a result, an experiment can be rerun with the same configuration, which makes it easier to trace how a model evolved and to identify potential improvements.
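
One way to make runs traceable is to record exactly which inputs produced each model: the container image (ideally referenced by its immutable `sha256` digest rather than a mutable tag), the hyperparameters, the data version, and the random seed. The Python sketch below derives a stable run ID from those inputs by hashing a canonical JSON encoding. The fingerprint scheme, the `run_fingerprint` name, and the placeholder digest and data version are hypothetical, not a Kubernetes or Kubeflow feature.

```python
import hashlib
import json


def run_fingerprint(image_digest: str, params: dict, data_version: str, seed: int) -> str:
    """Return a short, stable ID for an experiment's inputs (hypothetical scheme)."""
    record = {
        "image": image_digest,
        "params": params,
        "data": data_version,
        "seed": seed,
    }
    # sort_keys makes the encoding, and therefore the hash, independent of dict order.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


if __name__ == "__main__":
    # Placeholder digest and data version for illustration.
    print(run_fingerprint("sha256:1a2b3c", {"lr": 0.01, "epochs": 10}, "v3", 42))
```

Two runs with identical inputs get the same ID, while changing any input (even just the seed) produces a different one, so a stored metric can always be traced back to the exact configuration that produced it.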

Optimizing Resource Utilization for Cost Efficiency

Efficient